AI

Designing AI for Researchers: Lessons from 6 Months of Remy

Anthony Lam

February 25, 2026

Market Research

Articles

AI

Designing AI for Researchers: Lessons from 6 Months of Remy

Anthony Lam

February 25, 2026

Market Research

Articles

AI

64 AI Market Research Prompts for Data Analysis

February 25, 2026

Market Research

Articles

AI

64 AI Market Research Prompts for Data Analysis

February 25, 2026

Market Research

Articles

Advanced Research

9 Essential Questions for Evaluating Employee Satisfaction Software

February 20, 2026

Employee Research

Articles

Advanced Research

9 Essential Questions for Evaluating Employee Satisfaction Software

February 20, 2026

Employee Research

Articles

Advanced Research

How to Evaluate Market Research Vendors for Global Reach

Team Remesh

February 10, 2026

Market Research

Articles

Advanced Research

How to Evaluate Market Research Vendors for Global Reach

Team Remesh

February 10, 2026

Market Research

Articles

Advanced Research

3 Early-Stage Research Methods to Gather Consumer Insights

Team Remesh

January 27, 2026

Market Research

Articles

Advanced Research

3 Early-Stage Research Methods to Gather Consumer Insights

Team Remesh

January 27, 2026

Market Research

Articles

.avif)

Advanced Research

Why Agencies Should Embrace AI Tools for Market Research

Team Remesh

January 26, 2026

Articles

.avif)

Advanced Research

Why Agencies Should Embrace AI Tools for Market Research

Team Remesh

January 26, 2026

Articles

Advanced Research

The Top Market Research Companies for the CPG Industry

Team Remesh

January 20, 2026

Market Research

Articles

Advanced Research

The Top Market Research Companies for the CPG Industry

Team Remesh

January 20, 2026

Market Research

Articles

Advanced Research

The Most Cutting-Edge Consumer Insights Software of 2026

Team Remesh

January 5, 2026

Market Research

Articles

Advanced Research

The Most Cutting-Edge Consumer Insights Software of 2026

Team Remesh

January 5, 2026

Market Research

Articles

Research 101

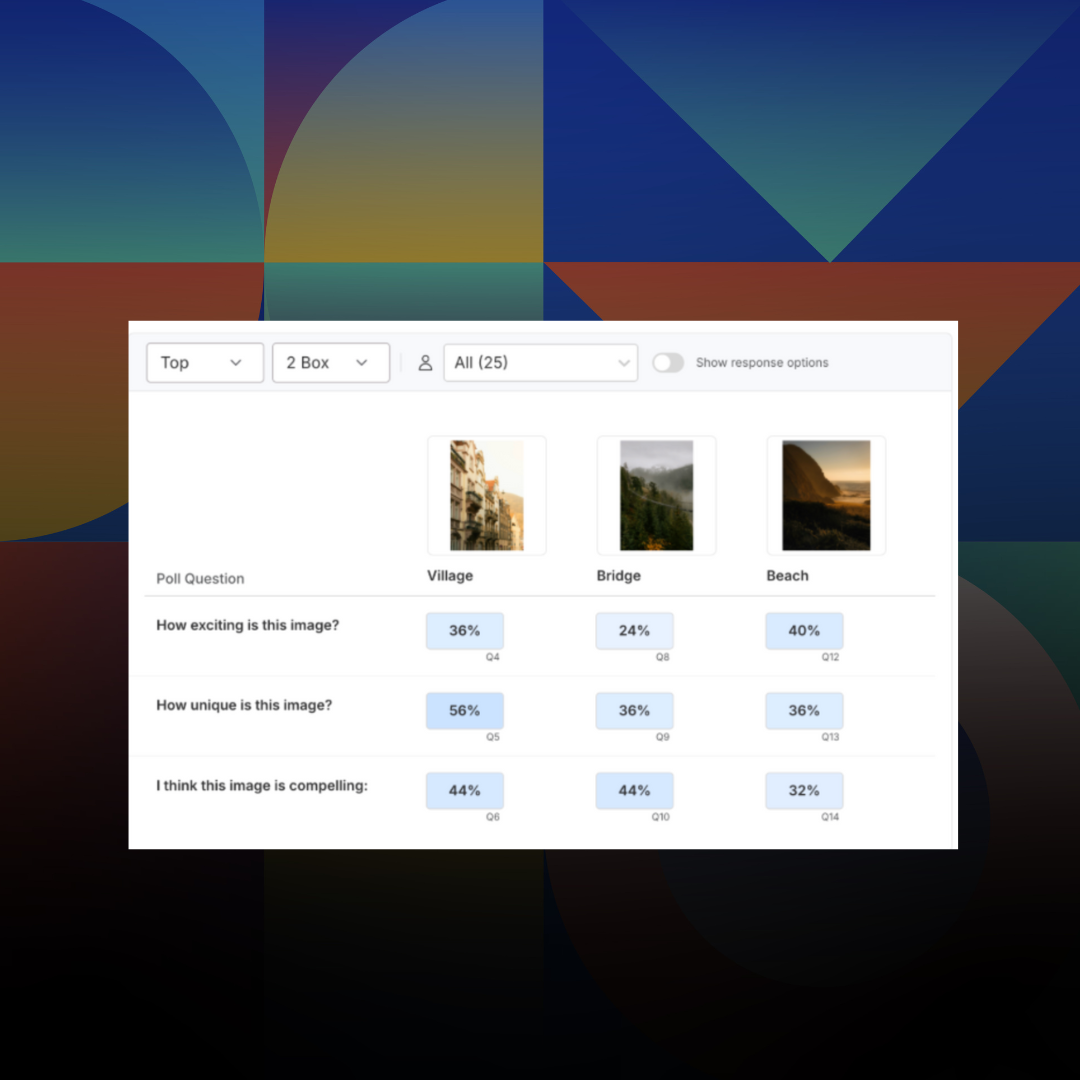

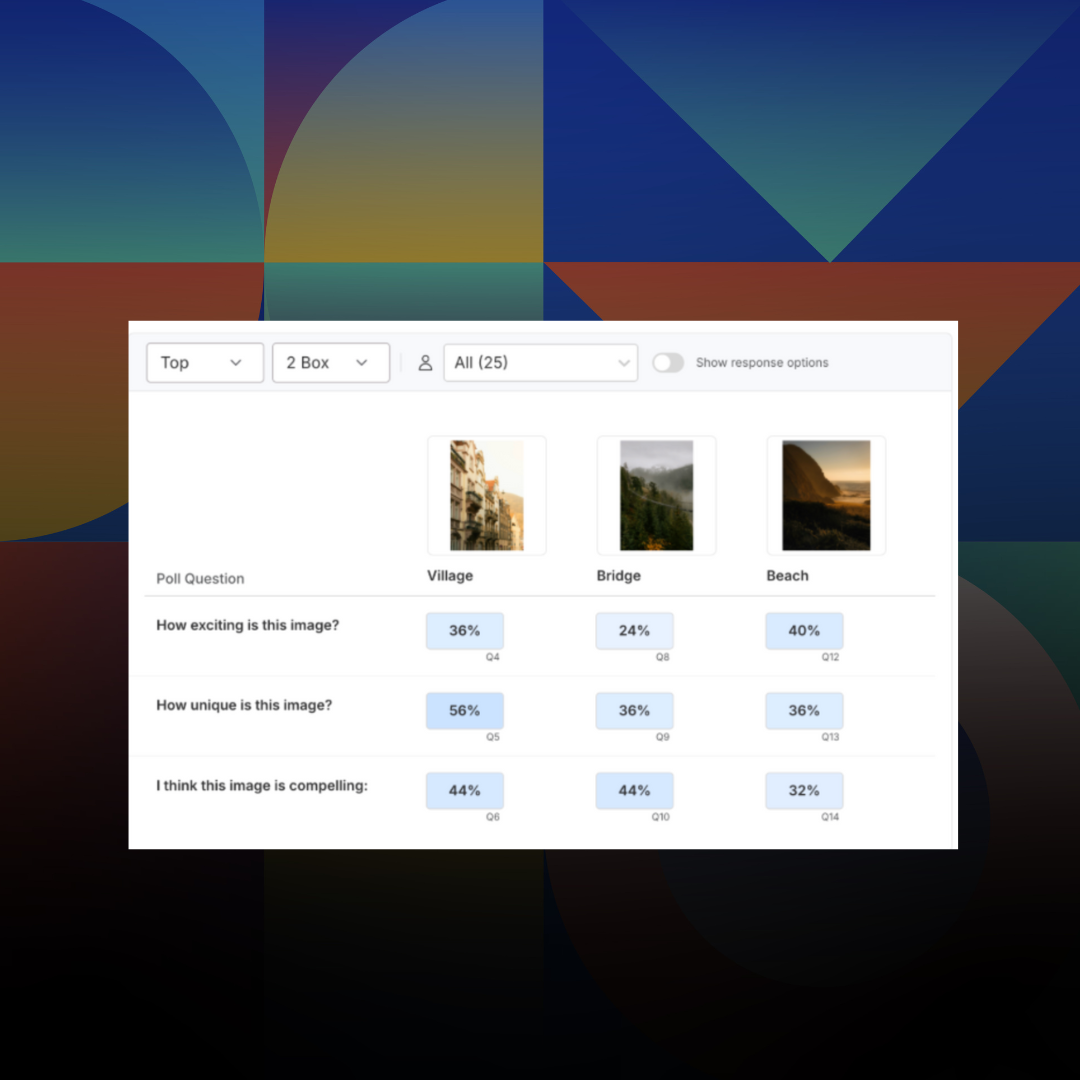

Introducing: Poll Comparison - Streamline Concept Testing and Make Better Decisions Faster

Emmet Hennessy

November 24, 2025

Market Research

Articles

Research 101

Introducing: Poll Comparison - Streamline Concept Testing and Make Better Decisions Faster

Emmet Hennessy

November 24, 2025

Market Research

Articles

Agentic AI for Research: A Practical Primer

Understanding what agentic AI is, where it fits, and how to evaluate its claims.

Estimated Reading Time: 8–10 minutes

Developed in collaboration with Suzanne Walsh, Principal Researcher @Remesh.

Executive Summary

- Modern AI agents pursue goals autonomously, using reasoning, context awareness, and a defined set of tools. Operating in an “observe, reason, act, evaluate” loop, an agent can adapt in unpredictable environments where static workflows fall short.

- In qualitative research, this flexibility can surface richer insights, but it also introduces risks when consistency, transparency, and auditability are critical.

- Many so-called “agentic systems” are structured workflows enhanced by large language models rather than fully autonomous decision-makers.

- This primer explains how to distinguish agents from rules-based systems, identify when agentic design adds value, and apply it in ways that preserve interpretability, control, and stakeholder trust.

Why Agentic AI Needs Clarity

“Agentic AI” has become a popular term in technology marketing, but for researchers, definitions are often vague or misleading. What makes a system agentic? What are agents? Is any AI tool with autonomous features agentic? How should researchers evaluate the risks and benefits?

These questions matter because the answers shape how insight teams approach automation. Misunderstanding or over-applying agentic systems can create unpredictability where structure is required. Used thoughtfully, they can support deeper, more adaptive forms of qualitative work.

This primer defines the core concepts behind modern AI agents, offers a lens for assessing their use in research, and outlines practical guidance for adoption.

What Is an AI Agent?

An AI agent is a program that independently decides which actions to take to achieve a defined goal. Unlike scripted systems that follow fixed instructions, agents operate in a feedback loop: they assess their environment, select an action, execute it, evaluate the outcome, and adjust the next step accordingly.

This loop, combined with the ability to choose actions based on dynamic, unpredictable context, sets agentic AI apart. Traditional decision-making systems depend on pre-programmed rules or specific combinations of inputs chosen in advance. By contrast, agentic systems can determine for themselves which pieces of prior knowledge, new information, and environmental signals to draw on, whether individually or in any combination, without those combinations needing to be anticipated by the user. This adaptability is especially valuable when the relevant factors cannot be fully categorized ahead of time or when the possibilities are too numerous to predefine.
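The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: the environment is a plain dictionary, the hypothetical `choose` function stands in for the LLM reasoning step with two hard-coded preferences, and the action names (`ask_clarifying_question`, `search_related_responses`) are invented for the example.

```python
from typing import Callable

State = dict
Action = Callable[[State], State]


def run_agent(env: State,
              choose_action: Callable[[State], str],
              actions: dict[str, Action],
              goal_met: Callable[[State], bool],
              max_steps: int = 10) -> tuple[State, list[str]]:
    """Repeat observe -> reason -> act -> evaluate until the goal is met or steps run out."""
    taken: list[str] = []
    for _ in range(max_steps):
        observation = dict(env)            # observe: snapshot the current environment
        name = choose_action(observation)  # reason: pick the next action from that context
        env = actions[name](env)           # act: the action returns an updated environment
        taken.append(name)
        if goal_met(env):                  # evaluate: check the outcome before looping again
            break
    return env, taken


# Hypothetical stand-in for LLM reasoning: probe short, vague answers first,
# then look for related responses from other participants.
def choose(obs: State) -> str:
    if len(obs["answer"].split()) < 6 and not obs["probed"]:
        return "ask_clarifying_question"
    return "search_related_responses"


# Hypothetical actions; a real system would call a platform or a model here.
actions: dict[str, Action] = {
    "ask_clarifying_question": lambda s: {**s, "probed": True,
                                          "answer": s["answer"] + " because the price went up"},
    "search_related_responses": lambda s: {**s, "related_found": True},
}

start = {"answer": "It's fine I guess", "probed": False, "related_found": False}
final, steps = run_agent(start, choose, actions, goal_met=lambda s: s["related_found"])
print(steps)  # ['ask_clarifying_question', 'search_related_responses']
```

The `max_steps` budget is a design choice worth keeping in any such loop: because the next action is chosen at run time, a cap keeps the agent from cycling indefinitely.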

In qualitative research, the difference between a rules-based system and an AI agent becomes clear. A rules-based system, such as a scripted chatbot, follows a predefined flowchart to ask questions in a fixed order. Once the flow begins, it only follows the paths defined in advance, without adapting in real time to unexpected inputs. For example:

- Online survey tools like Qualtrics or SurveyMonkey, where skip logic routes participants through fixed paths based on prior responses.

- Research community platforms (e.g., Recollective, CMNTY, or Fuel Cycle) where moderators set up automated discussion guides that follow a preset sequence.

- Customer experience/chat intercept tools (e.g., a website help bot) that follow a fixed “if–then” script to guide a user to FAQs or escalation.

- In-platform onboarding bots for research software that walk participants through instructions step-by-step in a rigid order.
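For contrast, the skip logic in the first example reduces to a fixed lookup table. The question IDs and answer codes below are hypothetical; the point is that every path exists before the survey starts, and an answer the designer did not anticipate simply ends the flow.

```python
# Hypothetical survey: each question maps its allowed answers to the next question.
SKIP_LOGIC: dict[str, dict[str, str]] = {
    "Q1_used_product": {"yes": "Q2_satisfaction", "no": "Q5_reasons_not_used"},
    "Q2_satisfaction": {"low": "Q3_what_went_wrong", "high": "Q4_favorite_feature"},
    "Q3_what_went_wrong": {"*": "END"},
    "Q4_favorite_feature": {"*": "END"},
    "Q5_reasons_not_used": {"*": "END"},
}


def route(answers: dict[str, str], start: str = "Q1_used_product") -> list[str]:
    """Walk the fixed flowchart; the path depends only on predefined branches."""
    path: list[str] = []
    node = start
    while node != "END":
        path.append(node)
        branches = SKIP_LOGIC[node]
        answer = answers.get(node, "*")
        # Unanticipated answers use the "*" branch if one exists; otherwise the flow ends.
        node = branches.get(answer, branches.get("*", "END"))
    return path


print(route({"Q1_used_product": "yes", "Q2_satisfaction": "low"}))
# ['Q1_used_product', 'Q2_satisfaction', 'Q3_what_went_wrong']
print(route({"Q1_used_product": "only on weekends"}))
# ['Q1_used_product']
```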

By contrast, an agentic AI decides in real time what to do next based on prior answers, new inputs from other participants, and its own embedded knowledge. It not only determines the next action but also evaluates which information is most relevant to making that choice. For example:

- In a live focus group, an agent could adapt the discussion flow mid-session based on emerging participant themes, asking probing questions that weren’t in the original guide.

- In an asynchronous study, it could surface earlier comments from other participants to spark debate or prompt elaboration from someone with a differing viewpoint.

- During longitudinal research, the agent could recall and reference what a participant said in a prior week’s activity, tailoring follow-up questions for deeper insight.

This capacity for goal-oriented, context-aware decision-making, and the ability to synthesize new and historical information without pre-scripted paths, is what defines the modern concept of AI agents.

AI Agents: Core Components

Agentic AI describes a family of interrelated concepts and technologies in which agents do more than analyze or predict. They act on their environment. Modern agents differ from classical ones in that the “why” and “how” behind their actions are not always pre-defined by programmers, which allows them to adapt dynamically to new situations.

Agents are often described through three interconnected components: observations, actions, and decision-making.

Observations

Every agent operates based on its ability to perceive aspects of its environment. The environment might be a conversation on a qualitative research platform, an e-commerce site, or even the agent’s own stored knowledge. Observations could include prior messages, participant data, relevant documents, or the agent’s inherent knowledge base. Adding or removing information changes the environment and may shift how the agent perceives its task.

Understanding what the agent sees is only part of the picture. Equally important is why the agent is looking at that information in the first place. This “why” gives meaning to the observations and directly shapes how they are used in decision-making.
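One way to picture the link between what an agent sees and why it is looking is to rank the available observations against the goal. The sketch below uses simple word overlap as a deliberately crude stand-in for the relevance judgments an LLM-based agent would make; the `build_context` function, the source names, and the example texts are all hypothetical.

```python
def build_context(goal: str, sources: dict[str, list[str]],
                  limit: int = 3) -> list[tuple[str, str]]:
    """Keep the observed items that share the most words with the goal."""
    goal_words = set(goal.lower().split())
    scored: list[tuple[int, str, str]] = []
    for source, items in sources.items():
        for item in items:
            overlap = len(goal_words & set(item.lower().split()))
            if overlap:  # items unrelated to the goal are left out of the context
                scored.append((overlap, source, item))
    # Python's sort is stable (also with reverse=True), so ties keep their original order.
    scored.sort(key=lambda entry: entry[0], reverse=True)
    return [(source, item) for _, source, item in scored[:limit]]


# Hypothetical observations available to the agent.
sources = {
    "messages": ["I switch brands when prices rise", "The packaging is nice"],
    "participant": ["age 34, shops weekly"],
    "documents": ["Brief: explore why shoppers switch brands"],
}
print(build_context("understand why participants switch brands", sources))
print(build_context("learn about packaging design", sources))
```

The same observations yield a different context when only the goal changes, which is the sense in which the "why" shapes how observations are used.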

Actions

An agent’s defining capability is its ability to act on its environment. Actions can include querying a dataset, generating a follow-up question, sending a targeted prompt, recommending a next step, or escalating a case to a human moderator. The more diverse and flexible the set of actions, the greater the agent’s ability to handle varied and unexpected scenarios.

On a live discussion platform, for instance, a scripted chatbot might only display the next pre-written question. An agentic AI, however, could notice that a participant’s response contains an unexpected term related to an emerging trend. It might choose to:

- Search prior participant responses for mentions of that trend.

- Ask the participant a clarifying question.

- Summarize the insight and flag it for the researcher in real time.
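The three actions above can be exposed to an agent as a small registry of named tools. The function names, the participant ID, and the example responses are hypothetical; the registry pattern simply shows that an agent can only take actions it has been given, which is also where a research team can draw its boundaries.

```python
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as an action the agent is allowed to take."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def search_prior_responses(term: str, responses: list[str]) -> list[str]:
    return [r for r in responses if term.lower() in r.lower()]


@tool
def ask_clarifying_question(participant: str, term: str) -> str:
    return f"@{participant}, what do you mean by '{term}'?"


@tool
def flag_for_researcher(term: str, evidence: list[str]) -> dict[str, Any]:
    return {"theme": term, "mentions": len(evidence), "examples": evidence[:2]}


def call(name: str, **kwargs: Any) -> Any:
    """Dispatch an action by name; anything outside the registry is refused."""
    if name not in TOOLS:
        raise ValueError(f"unknown action: {name}")
    return TOOLS[name](**kwargs)


# Hypothetical participant responses mentioning an emerging term.
responses = ["I keep seeing dupe brands online", "Dupes are cheaper", "I like the original"]
hits = call("search_prior_responses", term="dupe", responses=responses)
question = call("ask_clarifying_question", participant="P7", term="dupe brands")
flag = call("flag_for_researcher", term="dupe", evidence=hits)
print(question)
print(flag)
```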

Decision-making

Once an agent has gathered observations and identified possible actions, it must decide which action or combination of actions will best move towards achieving its goal. This reasoning process links context to behavior, whether through simple rules or more complex goal-based logic.

Reflexive agents operate on fixed rules. For example, if a self-driving car detects an object within one foot, it brakes immediately. The agent does not need to understand concepts like safety or collisions. It simply knows that the preconditions for braking have been met. Modern agentic systems, on the other hand, often use large language models to evaluate multiple, unpredictable variables at once. They weigh these factors against the agent’s purpose and adapt as conditions change.
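The braking example is a single condition-action rule. In the sketch below (the function name and the one-foot default are illustrative), nothing models safety or collisions; the rule fires whenever its precondition holds.

```python
def reflexive_brake(distance_ft: float, threshold_ft: float = 1.0) -> str:
    """Condition-action rule: brake if an object is within the threshold distance."""
    # No goals, no memory, no weighing of alternatives: only a precondition check.
    return "brake" if distance_ft <= threshold_ft else "continue"


for distance in (0.5, 1.0, 3.0):
    print(distance, reflexive_brake(distance))
```

A goal-based agent, by contrast, would be handed the objective rather than the rule and would have to work out which signals matter.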

In qualitative research, decision-making might occur when an AI agent detects an emerging theme during a live discussion. Based on its goal, such as exploring participant motivations, the agent could choose to:

- Ask a targeted follow-up question to the participant who introduced the theme.

- Cross-reference earlier responses to see if the theme appears elsewhere.

- Adjust the discussion flow to probe the theme with the entire group.

In this way, decision-making becomes a continuous and adaptive process rather than a fixed sequence of predefined actions.
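The theme example can be sketched as a decision that first gathers evidence and then chooses between the other two options. The fixed `group_threshold` is a deliberately simple stand-in for the judgment an LLM-based agent would apply, and the function name, action labels, and responses are hypothetical. What the sketch shows is the structure: the result of one action (cross-referencing) feeds the choice of the next.

```python
def plan_for_theme(theme: str, introduced_by: str, responses: dict[str, str],
                   group_threshold: float = 0.5) -> list[str]:
    """Choose follow-up actions for the goal 'explore participant motivations'."""
    plan = ["cross_reference_earlier_responses"]  # gather evidence first
    others = {p: r for p, r in responses.items() if p != introduced_by}
    mentions = sum(theme.lower() in r.lower() for r in others.values())
    share = mentions / len(others) if others else 0.0
    if share >= group_threshold:
        plan.append("probe_theme_with_whole_group")  # the theme looks widespread
    else:
        plan.append(f"ask_follow_up:{introduced_by}")  # the theme looks individual
    return plan


# Hypothetical responses from a live discussion; P1 introduced the theme.
responses = {"P1": "I buy refills to cut waste", "P2": "Refills are cheaper",
             "P3": "I like the scent", "P4": "Waste bothers me"}
print(plan_for_theme("refill", "P1", responses))
# ['cross_reference_earlier_responses', 'ask_follow_up:P1']

responses["P3"] = "Refill packs are easy to store"
print(plan_for_theme("refill", "P1", responses))
# ['cross_reference_earlier_responses', 'probe_theme_with_whole_group']
```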

What distinguishes today’s AI agents from their classical counterparts is how they make decisions. They are typically goal-based and, using LLMs, can perform generalized, unstructured reasoning. Agents can draw on relevant aspects of their observations to select appropriate actions, then re-incorporate the results of those actions back into their understanding of the environment. This feedback loop enables them to refine their approach over time, making them particularly well-suited for unpredictable or rapidly changing research contexts.

When AI Agents Add Value

Agents are most effective when tasks involve multiple decisions, unpredictable inputs, and the need to evaluate results mid-process. These conditions often arise in qualitative research, where participant contributions cannot always be anticipated and static workflows fall short.

Instead of following a rigid script, agentic tools interpret context, choose the most suitable next step, and adjust based on the outcomes of their actions, shifts in context, and incoming feedback. This flexibility makes them well suited to unstructured data environments where nuance matters.

Is an Agent the Right Fit?

Before adopting agentic AI, ask:

- Does the task require multiple steps or branching decision points? Agents excel when the work cannot be completed in a single pass and must adjust course along the way.

- Are the inputs ambiguous or likely to evolve as work progresses? Adaptive reasoning is most valuable when the system must interpret unclear signals or incorporate new information on the fly.

- Would performance improve if the system could adapt without constant human intervention? The real advantage of agentic AI is reducing the need for manual oversight while maintaining or improving quality.

If the answer is yes to all three, an agentic approach is likely to deliver better adaptability, richer insights, and greater efficiency in dynamic conditions, such as an AI agent moderating a live discussion by detecting emerging themes and adjusting questions in real time based on participant responses. If not, a simpler, rules-based method may produce faster, more predictable results without unnecessary complexity, such as a survey tool using skip logic to route participants through fixed questions or a scripted chatbot that follows a pre-set decision tree.
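The three screening questions amount to an all-or-nothing check. The function below only restates that rule in code (the name and parameters are illustrative); it is not a substitute for the judgment the questions are meant to prompt.

```python
def recommend_approach(multi_step: bool, ambiguous_inputs: bool,
                       better_with_autonomy: bool) -> str:
    """Return 'agentic' only when all three screening questions are answered yes."""
    if multi_step and ambiguous_inputs and better_with_autonomy:
        return "agentic"
    return "rules-based"


# Live discussion moderation: branching, evolving inputs, benefits from adapting on its own.
print(recommend_approach(True, True, True))    # agentic
# Survey with skip logic: branching exists, but every path and input is known in advance.
print(recommend_approach(True, False, False))  # rules-based
```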

Limits and Misconceptions

Not every task will benefit from an agentic approach. In contexts where reproducibility, auditability, or compliance is essential, agents can introduce risk. Because agentic systems use non-deterministic reasoning loops, their outputs can vary even when given the same inputs. This variability is not necessarily a flaw and is often the reason they can adapt so effectively, but it can be a problem when consistency is more important than nuance.

These limitations are particularly important in research environments where outputs must be consistent and explainable. Agentic systems, by design, do not guarantee repeatability, transparent logic paths, or predictable edge-case behavior. These qualities are often required for regulated decisions, long-term tracking, or client deliverables.
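A toy illustration of why the same input can produce different outputs: if a system samples its output from a probability distribution, as LLM text generation typically does, repeated runs on identical input can disagree, while always taking the highest-scoring option repeats exactly. The labels and scores below are hypothetical, and this models sampling in general, not any particular product or API.

```python
import math
import random


def pick_label(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample a label with weights proportional to a softmax; temperature 0 takes the top score."""
    if temperature == 0:
        return max(scores, key=lambda label: scores[label])
    labels = list(scores)
    weights = [math.exp(scores[label] / temperature) for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


# Hypothetical scores for how to code a single participant response.
scores = {"price": 2.0, "quality": 1.6, "convenience": 1.2}
rng = random.Random(7)  # seeded only so this demo is reproducible

sampled = [pick_label(scores, temperature=1.0, rng=rng) for _ in range(20)]
greedy = [pick_label(scores, temperature=0, rng=rng) for _ in range(20)]
print(sorted(set(sampled)))  # usually more than one label for the same input
print(sorted(set(greedy)))   # ['price']
```

Seeding makes this demo repeatable; it does not make the sampling approach itself deterministic across independent runs.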

Market Adoption and Industry Perspectives

Despite strong interest and bold projections, the adoption of agentic AI remains measured and highly selective. Surveys frequently reveal a gap between enthusiasm and execution.

Agentic AI Adoption at a Glance

- 90% of IT leaders expect agentic systems to improve workflows, but only 37% report deployment (UiPath, 2025)

- Just 2% of enterprises have scaled these systems across business units (Capgemini, 2025)

Most deployments remain limited to pilot programs or low-risk internal tools. Tasks that involve compliance, external reporting, or regulated processes are rarely entrusted to fully agentic systems.

Why This Matters for Researchers

For qualitative researchers and insight teams, the industry’s cautious approach to agentic AI is more than a technical observation. It is a signal about where risks are most acute and where standards must be highest. Three concerns stand out:

Lack of Transparency

Agentic systems can generate explanations for their decisions, but these are produced by the same large language models that generate the decisions themselves. Because these models operate probabilistically, explanations can include hallucinations or fabricated reasoning. This reduces reliability and can make it harder for researchers to defend or contextualize findings with confidence.

Limited Auditability

In any research or operational setting where consistent methodology is critical, agentic tools can unintentionally shift baselines or introduce drift because of their non-deterministic decision-making. Variability in outcomes can distort baselines and complicate trend analysis.
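One practical way to make drift visible is to keep a fixed benchmark set of responses, code it again in each wave, and measure how often the new codes match the earlier ones. The sketch below uses plain percent agreement for simplicity; chance-corrected statistics such as Cohen’s kappa are often preferred for reliability work. The theme labels are hypothetical.

```python
def percent_agreement(run_a: list[str], run_b: list[str]) -> float:
    """Share of benchmark responses that received the same code in both runs."""
    if len(run_a) != len(run_b) or not run_a:
        raise ValueError("both runs must code the same non-empty set of responses")
    matches = sum(a == b for a, b in zip(run_a, run_b))
    return matches / len(run_a)


# Hypothetical codes assigned to the same four benchmark responses in two waves.
wave_1 = ["price", "quality", "price", "convenience"]
wave_2 = ["price", "price", "price", "convenience"]
print(percent_agreement(wave_1, wave_2))  # 0.75
```

If agreement on an unchanged benchmark drops between waves, movement in the tracked metrics may reflect the tool rather than the participants.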

Edge-case Unpredictability

In exploratory research, edge cases often hold the most valuable signals, but they also carry the highest risk if mishandled. When probing new segments or behaviors, researchers need consistency in how responses are interpreted so they can compare like with like. LLMs in general tend to “soften” outlier data towards the mean, undercutting attempts to identify and highlight critical edge cases. When coupled with LLM-based decision-making, critical insights might be lost, obscured, or ignored altogether within a complex agentic pipeline.
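As a simplified numeric analogy for the softening problem: a summary built on the average absorbs a lone dissenter, while an explicit outlier check run before summarization keeps that response visible. The sketch uses the modified z-score based on the median absolute deviation (a common robust rule, usually with 3.5 as the cutoff); the ratings are hypothetical, and text responses would need a different notion of distance.

```python
from statistics import mean, median


def flag_outliers(values: list[float], cutoff: float = 3.5) -> list[float]:
    """Return values whose modified z-score, 0.6745 * (x - median) / MAD, exceeds the cutoff."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values equal the median; treat any departure as notable.
        return [v for v in values if v != med]
    return [v for v in values if abs(0.6745 * (v - med) / mad) > cutoff]


ratings = [7, 8, 7, 8, 7, 1]    # hypothetical: one participant strongly dissents
print(round(mean(ratings), 2))  # 6.33
print(flag_outliers(ratings))   # [1]
```

The average of 6.33 reads as broadly positive; the flagged rating is the response a researcher would want to examine first.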

These risks help explain why McKinsey’s 2025 AI Brief reports that many deployments described as agentic are, in practice, structured workflows enhanced by large language models. Georgian Partners, in collaboration with NewtonX, similarly found that most such systems still operate under human supervision and are typically limited to narrowly defined applications. Together, these findings indicate that genuinely autonomous, decision-making agents remain far less common in the field than marketing narratives suggest.

Because these risks directly affect data quality, comparability, and stakeholder trust, researchers must have clear visibility into how agentic systems function and the ability to intervene when necessary. Principles such as interpretability, auditability, and control should guide every decision about using agentic tools in a research context.

Implication for the Research Community

Agentic systems can deliver efficiency and flexibility, but research teams often place higher value on control, consistency, and clarity. Industry caution reflects a shared concern: without traceable reasoning and methodological integrity, insights can lose reliability and stakeholder trust.

This does not mean agents should be avoided. Their adoption should be evaluated through a practical lens. Will the system improve your ability to interpret, explain, and replicate its behavior? If the answer is no, the risks may outweigh the benefits.

Whether a system is truly agentic or simply enhanced by large language models, researchers must have clear visibility into how it operates and the ability to intervene when necessary. Interpretability, auditability, and control should guide every decision about integrating these tools into a research workflow.

Practical Guidance for Researchers

If you are considering adopting agentic AI tools, keep these practices in mind:

- Interrogate the behavior: Confirm the system is reasoning and adapting, not simply executing a fixed sequence.

- Match the tool to the task: Reserve agentic tools for work that benefits from adaptability, not for predictable, rules-based processes.

- Demand visibility: Every output should be explainable and traceable, even if generated by probabilistic models.

- Limit scope when needed: Keep researchers in the loop for complex or high-risk scenarios to avoid over-automation.
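The visibility and scope practices can be combined in a thin wrapper around each agent step: log what the agent saw, what it did, and the reason it gave, and hold high-risk actions until a researcher approves them. Everything here is hypothetical (field names, risk labels, the `approve` callback), and the logged rationale is model output that still needs checking, for the reasons given under Lack of Transparency.

```python
import json
from datetime import datetime, timezone
from typing import Callable


def run_step(log: list[dict], *, step: int, inputs: dict, action: str,
             rationale: str, risk: str, approve: Callable[[str], bool]) -> str:
    """Record one agent decision and gate high-risk actions behind human approval."""
    needs_review = risk == "high"
    if needs_review and not approve(action):
        outcome = "held_for_researcher"
    else:
        outcome = "executed"  # a real system would perform the action here
    log.append({
        "step": step,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "inputs": inputs,
        "action": action,
        "rationale": rationale,  # model-generated explanation: a claim to verify, not proof
        "risk": risk,
        "human_review": needs_review,
        "outcome": outcome,
    })
    return outcome


def researcher_declines(action: str) -> bool:
    return False  # hypothetical stand-in for a reviewer who has not approved yet


audit_log: list[dict] = []
run_step(audit_log, step=1, inputs={"participant": "P3"}, action="ask_follow_up",
         rationale="Answer mentioned an unexpected term", risk="low",
         approve=researcher_declines)
run_step(audit_log, step=2, inputs={"theme": "pricing"}, action="adjust_discussion_flow",
         rationale="Theme appears across many responses", risk="high",
         approve=researcher_declines)

for entry in audit_log:
    print(json.dumps(entry))
```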

The objective is not to replace human researchers but to extend their reach in contexts where adaptability, speed, and contextual judgment add the most value.

Final Thoughts: Beyond the Buzzword

Agentic AI has quickly become a buzzword in technology marketing, but for research professionals its value depends on application, not terminology. At its core, agentic AI is a system design approach. When implemented with intention, it can deliver more responsive, contextual, and efficient insight generation.

Not every use case benefits from agentic design. Applied without a clear purpose, it can introduce variability, obscure decision logic, and reduce transparency. For insight teams, the decision is not simply whether to adopt agentic AI. The imperative is to use it with precision, applying it where adaptability is essential and maintaining the interpretability, auditability, and control that make insights actionable. In doing so, researchers can move past the hype and turn agentic AI into a tool that advances the quality and impact of their work.

Further Reading and Citations

Industry Reports

- Capgemini. Agentic AI in the Enterprise: Adoption, Barriers, and Outcomes. 2025.

- UiPath. State of Agentic AI: 2025 Industry Trends

- Georgian & NewtonX. Agentic Platforms and Applications

- McKinsey & Company. Seizing the Agentic AI Advantage

Academic Sources

- Sapkota, R. et al. “AI Agents vs. Agentic AI: A Conceptual Taxonomy.” arXiv, 2025.

- Mamillapalli, S.K. “The Agentic AI Framework: Enabling Autonomous Intelligence.” IJIRMPS, 2025.

- Mukherjee, A. & Chang, H. “Agentic AI: Autonomy, Accountability, and the Algorithmic Society.” arXiv, 2025.

Want to see AI-powered research in action? Get a tailored demo to see how Remesh is helping research teams streamline their workflow.

About the Authors

Dan Reich

Chief Technology Officer, Remesh

Dan Reich is the CTO at Remesh, where he leads technology strategy and product innovation to drive growth across the organization. He holds an MS in Computer Science (Interactive Intelligence) from Georgia Tech. Dan’s work focuses on applied AI/ML, with deep experience in data privacy, security, and responsible deployment of AI in research workflows.

Suzanne Walsh

Principal Researcher, Remesh

Suzanne Walsh specializes in qualitative research methodology. She holds an MA in Anthropology and an MBA in Marketing. Suzanne helps clients execute complex employee and organizational research studies and leads thought leadership at Remesh.
